In the evolving era of artificial intelligence (AI), there is promising progress as well as challenging complications that demand attention. One such challenge is the appearance of FraudGPT, an AI-powered tool designed to facilitate cybercrime.

This tool is sold on dark web marketplaces and poses potential risks to businesses, individuals, and society at large. In that sense, it is an AI of the dark web.

In this article, we describe how the tool works, its features, and its subscription plans. We also describe how cybercriminals benefit from it and how you can protect yourself from this malicious AI.

Do you know about FraudGPT?

FraudGPT is a new ChatGPT-style, subscription-based AI tool built for fraud. It is powered by a large language model that has reportedly been fine-tuned to help cybercriminals commit cybercrime. The tool helps threat actors with criminal activities such as selling data, building cracking tools, writing phishing emails, and creating malware.

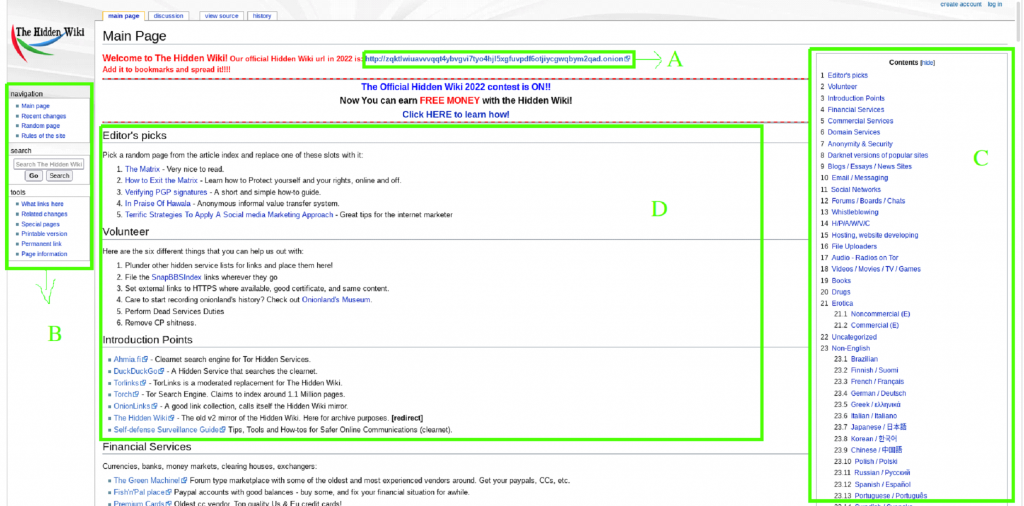

In July 2023, researchers from the security research company Netenrich discovered several ads on dark web marketplaces and Telegram channels advertising this AI tool. The main selling point in the advertisements was that the tool lacks the built-in controls and restrictions that prevent ChatGPT from responding to inappropriate queries from users.

The second selling point was that the tool is updated every 10 to 15 days. The ads also claimed that the tool uses different AI models under the hood. To better understand it, you can think of this tool as ChatGPT, but for fraud. The difference is that FraudGPT works as a dark web AI, while ChatGPT does not.

Is FraudGPT Just Like ChatGPT?

When ChatGPT was launched, it could be misused for many purposes, including helping cybercriminals build malware attacks. This is because ChatGPT's underlying language model was trained on a dataset that likely contained samples of a wide variety of data, including data that could be used for criminal projects.

Large language models are essentially fed everything, from the good material, like science, health, technology, and politics, to the bad, like malware code, messages from carding and phishing campaigns, and other criminal material.

Given the kinds of datasets required to train language models like the one that powers ChatGPT, it is almost inevitable that the model would contain samples of unwanted material.

Despite typically careful efforts to remove unwanted material from the dataset, some slips through, and it is usually enough to give the model the ability to produce material that helps cybercrime. That is why, with the right prompt engineering, users can get tools like ChatGPT to help them write scam emails or computer malware.

If tools like ChatGPT can help threat actors commit crimes in spite of all efforts to make these AI chatbots harmless, think of the power a tool like FraudGPT could bring, given that it was specifically fine-tuned on malicious material to make it suitable for cybercrime.

Consequently, we can say that FraudGPT is like the criminal twin of ChatGPT on steroids. FraudGPT's sophisticated algorithm reportedly lets it recognize user intent better than ChatGPT, which makes it difficult to distinguish between real and bogus content produced by the model.

How Does FraudGPT Work?

Basically, this tool is not significantly different from any other tool powered by a large language model. The Netenrich security research team procured and tested it.

According to the research team, the tool has an interactive user interface through which criminals access a language model that has been modified for committing cybercrimes. The layout is quite similar to ChatGPT's, with a history of user requests in the left panel.

The chat window takes up most of the screen. To get an answer, users simply type their query into the provided field and press Enter.

Netenrich ran a trial of the tool. The test case was a phishing email related to a bank, and the team found that the user input required was minimal. The team asked the tool to create an email that could be used as part of a business email compromise (BEC) scam, with a supposed CEO writing to an account manager to say an urgent payment was required. The system then produced an email that was not only remarkably persuasive but also calculated. Including the bank name in the request was all that was needed for the tool to complete the task.

The tool even indicated where a malicious link could be placed in the text. Scam landing pages that actively ask visitors for personal data are also within its capabilities.

The tool was also asked to name the most frequently visited or exploited online resources. This data could be useful for hackers planning future attacks.

Moreover, the online ad for this tool on the dark web claims that it can produce malicious code, assemble undetectable malware, search for vulnerabilities, and identify targets. The team also found that the seller of this AI tool had previously advertised hacking services for hire. They also linked the same person to a similar tool called WormGPT.

Unique Features of the ChatGPT Twin

Threat actors and hackers claim to have built their own version of ChatGPT-style text-generating technology, a copycat hacker tool called FraudGPT. Below are the features advertised for this chatbot.

- Create hacking tools

- Create untraceable malware

- Create phishing pages

- 24/7 Escrow

- Write malicious code

- Write scam pages/letters

- Find non-VBV BINs

- Find cardable websites

- Find criminal groups, websites, and markets

- Find leaks and vulnerabilities

- 3000+ confirmed sales and reviews

- Learn to code or hack

Subscription Plans of FraudGPT

Here are the subscription options advertised for this AI tool:

| Duration | Cost |
| --- | --- |
| 1 month | $200 |
| 3 months | $450 |
| 6 months | $1000 |
| 12 months | $1700 |

Is FraudGPT Dark Web AI (Artificial Intelligence)?

As mentioned above, this tool supports a range of nefarious activities, such as creating fraudulent emails, running sophisticated phishing campaigns, generating malicious code, discovering vulnerabilities, and writing scam letters. Beyond this extensive list of capabilities, the main function of the tool centers on helping create credible phishing campaigns.

Ads on the dark web highlight FraudGPT's proficiency in drafting enticing emails that persuade recipients to click malicious links. It may herald a worrying new era of AI-powered cyberattacks, with an expected surge in malicious ChatGPT derivatives on both the surface web and the dark web. The automation the tool provides makes phishing emails appear more convincing than ever.

Ads of FraudGPT on Dark Web Marketplaces

After the tool launched on 23 June 2023, cybercriminals set up Telegram channels to promote it. In these channels, the threat actors present themselves as verified sellers on dark web marketplaces. They opted for Telegram so they could offer their services without interruption, given the frequent exit scams on marketplaces. The marketplaces include:

- Versus

- World

- AlphaBay

- Empire

- Torrez

- WHM

This Tool Begins a New Era of Digital Risks

FraudGPT carries the tagline “Bot without Limitation, Rules, and Boundaries.” That alone hints at the potential risks. The chatbot has been stirring conversation in dark web vendor shops and is also making the rounds on dark web Telegram channels.

The tool assists malicious actors with writing phishing emails, building cracking and hacking tools, creating fake identities, and discovering carding websites. According to cybersecurity researchers, cybercriminals were using the tool to carry out cybercrimes, and it even wrote code for scam pages. Moreover, the vendor of the tool claims to be active on numerous dark web vendor shops as well as on Telegram.

The developers of FraudGPT built it with malicious intent, which makes it anything but the good kind of AI tool. The digital risks are significant enough that even Gary Gensler, Chair of the Securities and Exchange Commission (SEC), has spoken about the risks of AI. He considers it a transformative technology, though one with numerous problems that need addressing.

How You Can Protect Yourself from the Malicious FraudGPT

The examination of FraudGPT highlights the importance of staying vigilant. Given the novelty of these tools, it remains unclear when hackers might use them to create previously unseen threats, or whether they have already done so.

Meanwhile, FraudGPT and similar chatbots are becoming increasingly sophisticated. Built for malicious purposes, they could significantly speed up hackers' activities, allowing them to compose phishing emails or craft complete landing pages in seconds.

As a result, individuals must keep following cybersecurity best practices, which include remaining consistently suspicious of requests for personal data. Your defenses have to stay one step ahead.

Follow these tips to stay secure from any malicious AI chatbot software.

1. Train Your Employees

Sometimes, the AI chatbots a business relies on can be turned to malicious use. Because humans operate the AI, companies have to make sure their employees are well trained in identifying potential cyber threats.

2. Always Prioritize Cybersecurity Updates

Staying updated is important, and it can't be stressed enough. Staying current on cybersecurity includes regularly patching software, using trustworthy antivirus solutions, and keeping abreast of the latest cybersecurity developments.

3. Be Attentive to Online Communications

Another way to stay safe from malicious AI is to be careful with online communications. Double-check unexpected messages, particularly those prompting financial actions or asking you to reveal personal data. Use official communication channels to verify any such requests.
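
To make the idea of double-checking unexpected messages concrete, here is a minimal Python sketch that flags common warning signs in a message. The phrase lists and the `red_flags` function are hypothetical and chosen only for illustration. A simple keyword check like this is an assumption about what "suspicious" looks like and is no substitute for verifying the request through an official channel.

```python
import re

# Hypothetical red-flag phrases; a real deployment would tune these lists.
URGENCY_TERMS = ("urgent", "immediately", "right away", "asap")
PAYMENT_TERMS = ("wire transfer", "payment", "invoice", "gift card", "bank details")
SECRET_TERMS = ("password", "verification code", "login", "social security")


def red_flags(message: str) -> list[str]:
    """Return the red-flag categories found in a message (case-insensitive)."""
    text = message.lower()
    flags = []
    if any(term in text for term in URGENCY_TERMS):
        flags.append("urgency")
    if any(term in text for term in PAYMENT_TERMS):
        flags.append("payment request")
    if any(term in text for term in SECRET_TERMS):
        flags.append("credential request")
    # Any http(s) link in an unexpected message deserves a second look.
    if re.search(r"https?://", text):
        flags.append("contains link")
    return flags
```

A message that triggers several categories at once, such as an urgent payment request with a link, is a good candidate for verification by phone before anyone acts on it.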

4. Exercise Caution with Unknown Risks

FraudGPT can create phishing pages and URLs. So always make sure you know the source before clicking links or downloading attachments, since the message may have been generated by a tool like FraudGPT.

5. Act Quickly and Report

If you receive any dubious content or suspicious messages, do not hesitate. Report them to the relevant authorities or your company's IT team.
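
One way to check the source of a link is to compare its hostname against a list of domains you already trust. The Python sketch below assumes a hypothetical allowlist (`TRUSTED_DOMAINS`) and a hypothetical helper name (`is_trusted_link`). It illustrates the idea only and does not detect every kind of deceptive URL.

```python
from urllib.parse import urlparse

# Hypothetical allowlist; replace with domains you actually trust.
TRUSTED_DOMAINS = {"example-bank.com", "example.org"}


def is_trusted_link(url: str) -> bool:
    """True only for https links whose host is a trusted domain or a subdomain of one."""
    parsed = urlparse(url)
    host = (parsed.hostname or "").lower()
    if parsed.scheme != "https" or not host:
        return False
    # Match the exact domain or a subdomain, so that look-alikes such as
    # "example-bank.com.evil.test" are rejected.
    return any(host == d or host.endswith("." + d) for d in TRUSTED_DOMAINS)
```

Matching on the end of the hostname matters because phishing links often place a familiar name at the start of an unrelated domain.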

Some Other Things You Have to Consider to Protect Yourself from This Tool

- Always contact firms directly via an independently verified number to check legitimacy. Do not use the contact details provided in suspicious messages.

- Shred documents containing personally identifying or financial data when they are no longer needed.

- Use antivirus and anti-malware tools on the devices you work with.

- Check account statements frequently for suspicious activity.

- Use strong, unique passwords that are hard to crack, and enable two-factor authentication (2FA) wherever it is available. Never share passwords or codes sent to you.
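
As an illustration of the password advice above, the following Python sketch generates a random password with the standard library's `secrets` module, which is designed for security-sensitive randomness. The function name, default length, and 12-character minimum are assumptions made for this example, not an official recommendation. In practice, a reputable password manager does the same job.

```python
import secrets
import string

# Letters, digits, and punctuation give a large pool of possible characters.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Generate a random password using a cryptographically secure source."""
    if length < 12:
        raise ValueError("use at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))
```

Generate a separate password for each account so that one leaked password does not expose the others.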

What Benefits Will Cyber Criminals Get from FraudGPT?

People are intrigued by the potential of AI chatbots, even those who have not tried them. Cybercriminals, too, are looking for more ways to benefit from AI.

Many well-known firms, including Apple and Samsung, have already imposed restrictions on how employees can use AI chatbots or tools in their roles. According to some reports, 72 percent of small firms fold within two years of a major data loss. Often, people associate criminal activity only with the loss of data.

Here is how cybercriminals are using FraudGPT and other AI tools to their benefit.

- Phishing Scams: FraudGPT and similar tools produce trustworthy-looking phishing emails and messages that make you believe they are genuine, all in a bid to have you reveal details such as login credentials, phone numbers, or credit card information.

- Social Engineering: FraudGPT and comparable tools can imitate human conversation, so they can lull you into a false sense of trust and get you to divulge data you normally would not.

- Malware Distribution: AI tools built for malicious purposes are also adept at writing enticing text that encourages users to click links or download attachments loaded with malware.

- Fraudulent Activities: From fake documents to fake statements, malicious AI tools and chatbots help hackers target individuals and businesses, leading to significant financial losses.

- False Data: Tools like FraudGPT can readily produce inaccurate or false information, which cybercriminals could use to carry out malicious attacks.

AI Discussion Remains Hyped

In addition to FraudGPT, other interesting AI advances have drawn discussion. Some are more benign, though possibly indicative of the era technology is heading into. For instance, social media platforms were buzzing when a movie trailer created entirely by AI was announced. The Genesis trailer shows a dystopian future reminiscent of apocalyptic science fiction movies of the past. The creators used Midjourney and Runway to produce it.

However, others have pointed out less obvious concerns. A research paper has been published discussing the cost of using particular languages in language models. English is an inexpensive language, while others are far more expensive to train with.

FAQs

Q: Will AI replace cybersecurity?

Ans: To be honest, no. AI is unlikely to replace cybersecurity experts entirely. While AI can handle repetitive and data-heavy tasks, human expertise is important for strategic decision-making, careful judgment, and intricate problem-solving.

Q: How are AI tools disrupting cybersecurity?

Ans: AI tools like ChatGPT will not completely replace security professionals anytime soon. However, they can boost productivity by automating threat detection, analyzing data sets, and continuously learning to find anomalies and new attacks before they happen.

Q: What is the most common cyber threat?

Ans: The most common types of cyber threats are malware, phishing, DDoS (Distributed denial of service) attacks, DNS tunneling, SQL injection, Business email compromise (BEC), crypto-jacking, eavesdropping attacks, and IoT-based attacks.

Q: Why is AI beneficial for cybersecurity?

Ans: AI-powered solutions can sift through huge amounts of data to recognize abnormal behavior and spot malicious activity such as a new zero-day attack. AI can also automate many security processes, such as patch management, making it easier to stay on top of your cybersecurity needs.
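
As a very simplified illustration of spotting abnormal behavior in data, the Python sketch below flags values that sit far from the average using a z-score. Real security products rely on far richer models. The function name, the threshold of 2.0, and the idea of feeding it daily failed-login counts are all assumptions made for this example.

```python
from statistics import mean, stdev


def find_anomalies(values: list[float], threshold: float = 2.0) -> list[int]:
    """Return indexes of values more than `threshold` standard deviations from the mean."""
    if len(values) < 2:
        return []  # not enough data to estimate spread
    mu = mean(values)
    sigma = stdev(values)  # sample standard deviation
    if sigma == 0:
        return []  # all values identical, nothing stands out
    return [i for i, v in enumerate(values) if abs(v - mu) / sigma > threshold]
```

For the hypothetical daily counts `[3, 4, 2, 5, 3, 4, 3, 60]`, the function returns `[7]`, pointing at the day with 60 failed logins.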

Wrapping Up

The rise of FraudGPT demonstrates the danger of unregulated AI development. Without appropriate governance, advanced generative models can easily be weaponized by threat actors, as demonstrated by tools like FraudGPT that are built explicitly for committing cybercrime.

To counter this threat, the AI community must prioritize model transparency, accountability, and ethics in development and deployment. Security solutions, in turn, must incorporate intelligent systems to match the automation and scale achieved by such threats. With collaborative efforts on both fronts, the promise of AI can be harnessed while mitigating its risks.

I hope you find this article informative about the malicious generative AI tool known as FraudGPT. We have shared details of its features, its subscription plans, and how you can protect yourself from it.

Let us know if you want to know more about FraudGPT or any other malicious AI tool.